The offline speech recognition system currently used in MHC_Talker is SherpaNcnn:

- GitHub project address: 👉 https://github.com/k2-fsa/sherpa-ncnn

- Example models and documentation: 👉 https://k2-fsa.github.io/sherpa/

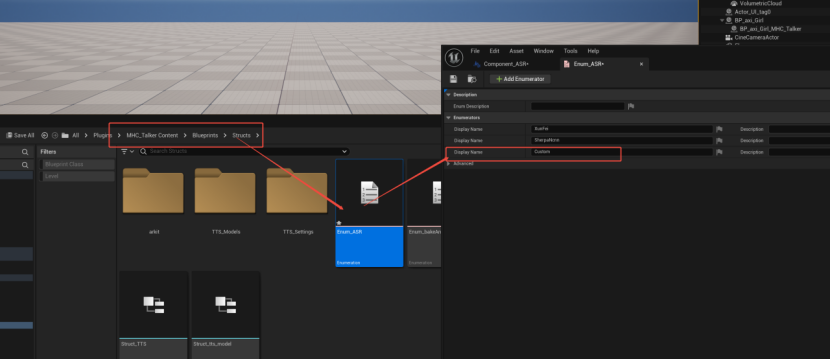

If you wish to change or customize the speech recognition model, edit Component_ASR, which is located under Plugins > MHC_Talker Content > Blueprints > Component.

1. Introduction to the Speech Recognition Solutions

Two speech recognition systems are integrated into the current component:

- Offline recognition: SherpaNcnn

- Online recognition: Xunfei (iFlytek)

2. How to add custom speech recognition

If you wish to integrate your own recognition model alongside the existing recognition solutions, follow the process below:

- Add a Custom entry to the enumeration.

- Connect the corresponding functions under the Custom branch.
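The two steps above amount to adding one enumeration value and routing it to your own functions. The following is a minimal language-neutral sketch of that idea in Python, not the plugin's Blueprint graph: `AsrBackend`, the `connect_*` functions, and `CONNECT_HANDLERS` are hypothetical names chosen for illustration only.

```python
from enum import Enum, auto
from typing import Callable, Dict


class AsrBackend(Enum):
    """Hypothetical mirror of the enumeration inside Component_ASR."""
    SHERPA_NCNN = auto()  # offline recognition
    XUNFEI = auto()       # online recognition
    CUSTOM = auto()       # the newly added entry


def connect_sherpa() -> str:
    return "sherpa-ncnn connected"


def connect_xunfei() -> str:
    return "xunfei connected"


def connect_custom() -> str:
    # Placeholder: the start-up logic of your own model would go here.
    return "custom connected"


# One handler per enumeration value, similar in spirit to a switch on an enum.
CONNECT_HANDLERS: Dict[AsrBackend, Callable[[], str]] = {
    AsrBackend.SHERPA_NCNN: connect_sherpa,
    AsrBackend.XUNFEI: connect_xunfei,
    AsrBackend.CUSTOM: connect_custom,
}


def connect(backend: AsrBackend) -> str:
    """Run the connect routine that belongs to the selected backend."""
    return CONNECT_HANDLERS[backend]()
```

Keeping one handler per enumeration value means a new backend only touches the enumeration and the table, which is the same reason the component asks you to add a Custom entry rather than modify the existing branches.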

3. Integration steps, using Xunfei Speech Recognition as an example

The following example integrates Xunfei speech recognition to illustrate the specific steps:

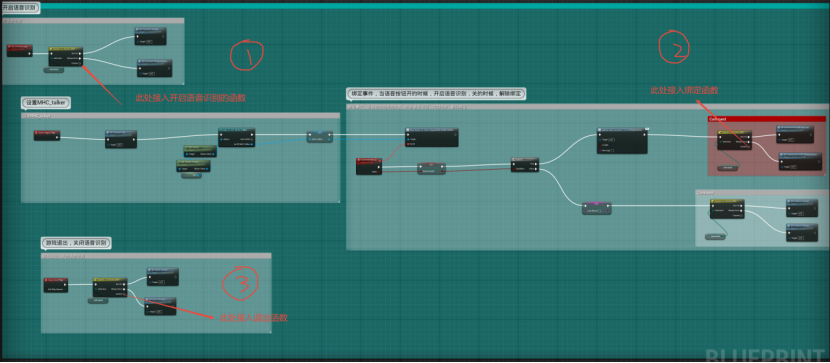

Step 1: Create the ED_connect_xunfei function

This function starts speech recognition while the program is running.

The function Func Check Xun Fei Key checks whether the API ID and API KEY are valid.

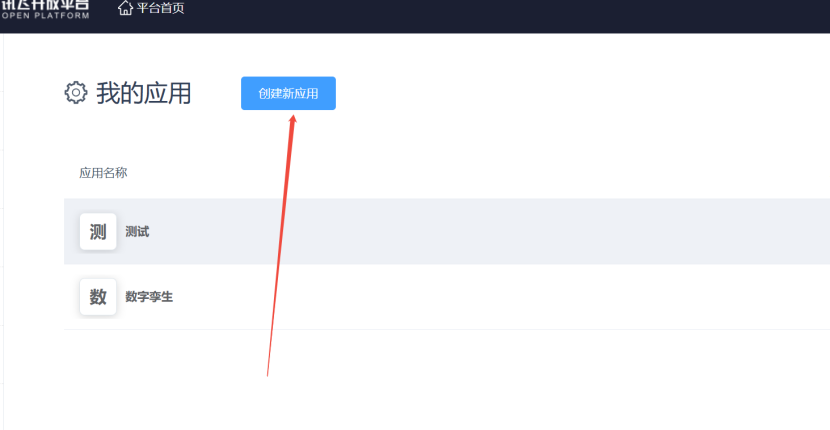

If you don't have an API ID, register for an account on the official Xunfei website and then do the following in the console:

- Create a new application, as shown in the figure.

- Add "Real-time Speech Transcription Standard Edition" to the new application and click "Apply for Purchase" to get a free trial.

The Connect Channel function (implemented in C++) connects to the Xunfei (XF) server. Pause XF indicates that the program needs to pause audio capture when it starts.

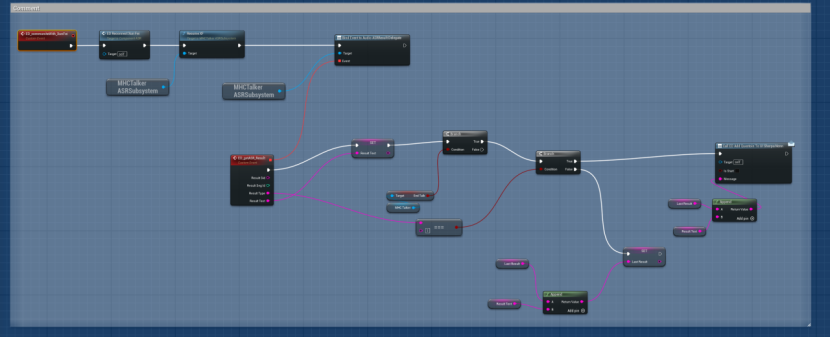

Step 2: Create the ED_communiteWith_XunFei function

This function binds the recognition events, returns the recognition results, and prints them to the UI.

It includes ED Reconnect Xunfei, which reconnects automatically when Xunfei has not detected any audio for 15 seconds, so that recognition does not shut down.

Resume XF reactivates the microphone.

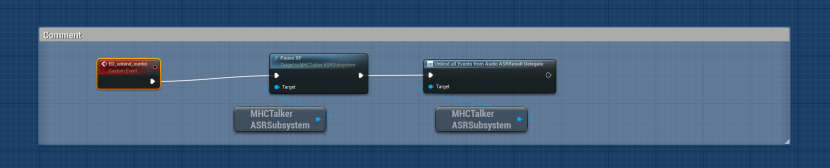
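The 15-second reconnect rule described in this step can be pictured as a small watchdog timer. This is a minimal sketch under stated assumptions, not the plugin's implementation: `ReconnectWatchdog`, `on_audio`, and `tick` are hypothetical names, and the `reconnect` callback stands in for whatever ED Reconnect Xunfei actually does.

```python
import time
from typing import Callable

SILENCE_TIMEOUT_S = 15.0  # timeout taken from the text above


class ReconnectWatchdog:
    """Hypothetical helper: reconnects after a period without audio."""

    def __init__(
        self,
        reconnect: Callable[[], None],
        timeout_s: float = SILENCE_TIMEOUT_S,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._reconnect = reconnect
        self._timeout_s = timeout_s
        self._clock = clock
        self._last_audio = clock()

    def on_audio(self) -> None:
        """Call whenever audio is detected; restarts the silence timer."""
        self._last_audio = self._clock()

    def tick(self) -> bool:
        """Call periodically; reconnects and returns True after a timeout."""
        now = self._clock()
        if now - self._last_audio >= self._timeout_s:
            self._reconnect()
            self._last_audio = now  # start a fresh silence window
            return True
        return False
```

The clock is passed in as a parameter so the timeout logic can be exercised without waiting 15 real seconds; in a game loop, `tick` would be called once per frame or from a timer.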

Step 3: Create the ED_unbind_xunfei function

This function unbinds the recognition events so that, while the digital human is speaking, its own voice is not recognized and sent to the UI.

It turns off audio capture and unbinds the recognition events.
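The effect of binding and unbinding can be illustrated with a tiny event dispatcher. This is only a sketch of the general pattern, assuming a simple listener list: `RecognitionEvent`, `show_in_ui`, and `ui_lines` are hypothetical names and do not correspond to the plugin's Blueprint nodes.

```python
from typing import Callable, List

Listener = Callable[[str], None]


class RecognitionEvent:
    """Hypothetical stand-in for a recognition-result event."""

    def __init__(self) -> None:
        self._listeners: List[Listener] = []

    def bind(self, listener: Listener) -> None:
        if listener not in self._listeners:
            self._listeners.append(listener)

    def unbind(self, listener: Listener) -> None:
        if listener in self._listeners:
            self._listeners.remove(listener)

    def broadcast(self, text: str) -> None:
        # Iterate over a copy so a listener may unbind itself while running.
        for listener in list(self._listeners):
            listener(text)


ui_lines: List[str] = []


def show_in_ui(text: str) -> None:
    """Stand-in for printing a recognition result to the UI."""
    ui_lines.append(text)


on_result = RecognitionEvent()

on_result.bind(show_in_ui)            # the user is talking
on_result.broadcast("hello")

on_result.unbind(show_in_ui)          # the digital human starts speaking
on_result.broadcast("echo of the digital human")  # ignored, nobody listens

on_result.bind(show_in_ui)            # the digital human has finished
on_result.broadcast("how are you")
```

After this sequence, only the two user utterances are in `ui_lines`; the result produced while the event was unbound never reaches the UI.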

Step 4: Create the ED_close_xunfei function

This function exits speech recognition safely by disconnecting from the Xunfei server.

Process summary

The overall process is as follows:

- Connect speech recognition: choose a solution such as SherpaNcnn or Xunfei.

- Bind speech recognition: output the recognition results and print them to the UI.

- Unbind speech recognition: the character's own voice does not need to be recognized during a conversation, which avoids interruptions.

- Close speech recognition: disconnect recognition safely when exiting the game.
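The four stages above can be read as a small state machine. The sketch below is one possible way to model the allowed order of calls, under the assumption that binding requires an open connection and that closing is allowed from either connected state; `AsrState`, `AsrSession`, and the action names are hypothetical and not part of the plugin.

```python
from enum import Enum


class AsrState(Enum):
    CLOSED = "closed"
    CONNECTED = "connected"  # connected, events not bound
    BOUND = "bound"          # connected, results flow to the UI


# (action, current state) -> next state; anything else is rejected.
_TRANSITIONS = {
    ("connect", AsrState.CLOSED): AsrState.CONNECTED,
    ("bind", AsrState.CONNECTED): AsrState.BOUND,
    ("unbind", AsrState.BOUND): AsrState.CONNECTED,
    ("close", AsrState.CONNECTED): AsrState.CLOSED,
    ("close", AsrState.BOUND): AsrState.CLOSED,
}


class AsrSession:
    """Hypothetical model of the connect/bind/unbind/close lifecycle."""

    def __init__(self) -> None:
        self.state = AsrState.CLOSED

    def step(self, action: str) -> AsrState:
        key = (action, self.state)
        if key not in _TRANSITIONS:
            raise ValueError(f"cannot {action!r} while {self.state.value}")
        self.state = _TRANSITIONS[key]
        return self.state
```

A typical conversation would then run `connect`, `bind`, `unbind` while the character speaks, `bind` again, and finally `close` when the game exits.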
