MHC Talker

AI tools for digital humans that bring MetaHuman characters to life

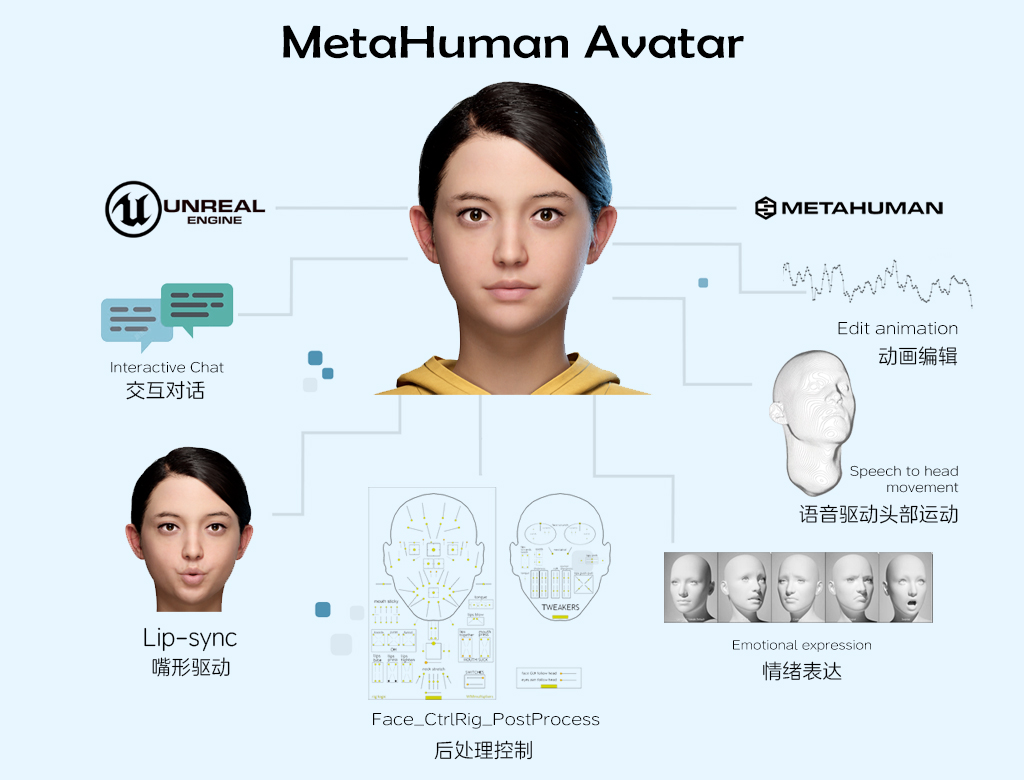

MHC Talker calls the AnimaCore API from within Unreal Engine to drive high-quality, real-time dialog for MetaHuman characters. Intelligent dialog characters can be configured in one click. Features include text or voice dialog, real-time lip-sync, post-processing fine-tuning of expressions, natural head movements that follow vocal stress, body movements driven by dialog logic, animation data recording, and more.

Note that modifications to a character's DNA and blendshapes (BS) strongly affect its animation quality. Make sure any DNA and blendshape modifications conform to the official MetaHuman standard specifications.

One-Click MetaHuman Configuration

Dual-channel text/voice input

Real-time lip-sync inference

Vocal stress linked to head micro-movements

Post-processing expression fine-tuning control

Body movement libraries driven by conversational logic

MHC Talker Demo

Real-time interactive dialog

3D digital human real-time dialog

Features include:

- Real-time AI lip-sync from voice

- Character background settings

- Logic-driven body language and behavior

- Facial expressions driven by voice emotion

- Post-processing fine-tuning of expressions

- A wide range of voice types to choose from

- And more

Strong generalization across languages

FAQ

Common questions

Only the standard MetaHuman rig is supported at this stage, as it is currently the best choice for general-purpose rigging. Support for other rigs is planned for later releases.

Yes. MHC Talker accepts both text and voice input: send text or audio to the plugin's interface. See the relevant documentation for details.

MHC Talker currently supports only Unreal Engine. A Unity version may be considered in the future.

Supported Unreal Engine versions are 5.3, 5.4, and 5.5, with support for newer versions added on an ongoing basis.

Development is currently supported on both Windows and Linux.

Packaged builds are currently supported on Windows, Linux, and Android.